Edit: This post is pretty old and Elasticsearch/Logstash/Kibana have evolved a lot since it was written.

Part 1 of 4 – Part 2 – Part 3 – Part 4

Have you heard of Logstash / ElasticSearch / Kibana? I don’t wanna oversell it, but it’s AMAZING!

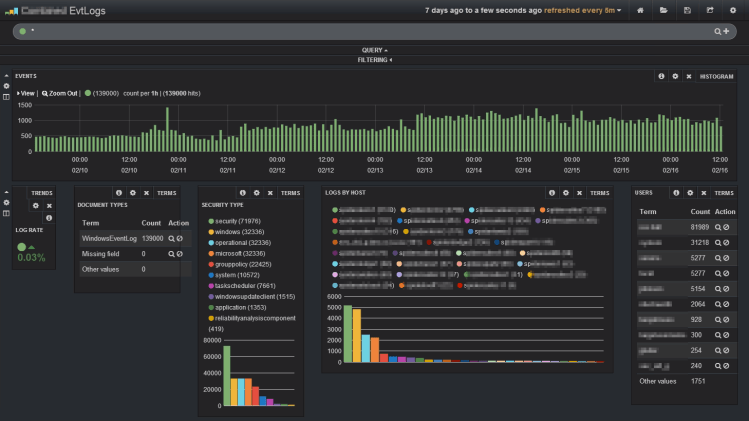

I’ll start with a screenshot. You know you want this. I have to blur a few things to keep some 53cr375 about my environment.

This is my configuration for collecting Windows event logs. I’m still working out the differences between the Windows XP, Server 2008 R2, and Windows 7 computers I’m collecting logs from, but this has already proven very useful.

If you don’t know about it yet, you should really go watch this webinar. http://www.elasticsearch.org/webinars/introduction-to-logstash/ I’ll wait.

Before I start into a dump of my install notes, I’ll link a few resources.

The getting started guide is a great place to begin:

http://logstash.net/docs/1.3.3/tutorials/getting-started-centralized

I based my setup very heavily on this guy’s: Part 1, Part 2, Part 3

Logstash / Elasticsearch are moving so quickly that this will likely be obsolete before I’m done writing it. Go to the sources for more current info.

http://www.elasticsearch.org/overview/

http://www.elasticsearch.org/overview/logstash/

http://www.elasticsearch.org/overview/elasticsearch/

http://www.elasticsearch.org/overview/kibana/

The Logstash Book is $10 for the PDF version. Buy it. Print it. Three-hole-punch it and stuff it in a binder. Write on it. Throw it in frustration. Learn to love it.

http://www.logstashbook.com/

I ran into a couple of issues. Turns out I wasn’t alone. Google showed me the way.

https://groups.google.com/forum/m/#!topic/logstash-users/XXZLmB2TeNo

INSTALL LINUX

Install Debian. I like using the netinstall. It’s small and you always get up-to-date packages.

http://www.debian.org/distrib/netinst

Run the installer. When you get to software selection, select only “SSH server” and “Standard system utilities”.

When prompted during the install, I made myself a “raging” standard user account.

[text]
raging@logcatcher:~$ uname -a; lsb_release -a
Linux logcatcher 3.2.0-4-amd64 #1 SMP Debian 3.2.51-1 x86_64 GNU/Linux
No LSB modules are available.
Distributor ID: Debian
Description: Debian GNU/Linux 7.3 (wheezy)
Release: 7.3
Codename: wheezy
[/text]

INSTALL SUDO

I don’t trust myself to run as root, so I use sudo instead. I switch to the root user, install sudo, add my raging account to the sudo group, and list the current IP address. If this were more than just a temporary setup, I’d set a static IP or a DHCP reservation.

[text]
su root
apt-get install sudo
usermod -a -G sudo raging
ip address show
[/text]
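Keep in mind that a new group membership only applies to new login sessions, so sudo won’t work for raging until you log out and back in (SSHing in fresh, as below, takes care of that). Here’s a minimal sketch for checking membership; `in_group` is a hypothetical helper name, not a standard command.

```shell
#!/bin/sh
# Hypothetical helper: succeed if user $1 is a member of group $2,
# according to the group database (not the current session's groups).
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx -- "$2"
}

# Example: in_group raging sudo && echo "raging can use sudo after re-login"
```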

And this is about as far as I bother on the local console. From here on I’ll SSH in from a more comfortable computer.

INSTALL JAVA

You’ll have to get the correct download link for Java from Oracle. I guarantee the AuthParam on my link has long since expired. More info: https://wiki.debian.org/JavaPackage

[text]

sudo vi /etc/apt/sources.list

[/text]

and add this line:

[text]

deb http://ftp.us.debian.org/debian/ wheezy contrib

[/text]
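If you run through these steps more than once, it’s easy to end up with the contrib line in there twice. A minimal sketch of an append-only-if-missing helper (`add_line_once` is a hypothetical name); run it from a root shell, then `apt-get update` so apt sees the new packages.

```shell
#!/bin/sh
# Hypothetical helper: append line $1 to file $2 unless an identical
# line is already present, so re-running the setup is harmless.
add_line_once() {
  line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as root):
#   add_line_once 'deb http://ftp.us.debian.org/debian/ wheezy contrib' /etc/apt/sources.list
```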

[text]
sudo apt-get update
sudo apt-get install java-package
wget "http://download.oracle.com/otn-pub/java/jdk/7u51-b13/jre-7u51-linux-x64.tar.gz?AuthParam=1391313765_3cdad81c5f3af7b5ef5ce047211e4c2d" -O jre-7u51-linux-x64.tar.gz
make-jpkg jre-7u51-linux-x64.tar.gz
sudo dpkg -i oracle-j2re1.7_1.7.0+update51_amd64.deb
sudo update-alternatives --auto java
[/text]

INSTALL ELASTICSEARCH

Download and install elasticsearch. There’s probably a newer version available.

[text]

wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-0.90.10.deb

sudo dpkg -i elasticsearch-0.90.10.deb

[/text]

Create the Elasticsearch data and log directories and set their permissions:

[text]
sudo mkdir /data
sudo mkdir /data/logs
sudo mkdir /data/data
sudo chown -R elasticsearch:elasticsearch /data/logs
sudo chown -R elasticsearch:elasticsearch /data/data
sudo chmod -R ug+rw /data/logs
sudo chmod -R ug+rw /data/data
[/text]

Configure elasticsearch

[text]

sudo vi /etc/elasticsearch/elasticsearch.yml

[/text]

Uncomment and update these values to something that makes sense for your environment:

[text]

Change the value of cluster.name to “logcatcher”

Change the value of node.name to something memorable like “logstorePrime”

Change the value of path.data to your data directory – mine was /data/data

Change the value of path.logs to your logs directory – mine was /data/logs

[/text]
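If you’d rather script those edits than make them in vi, here’s a rough sketch. `set_yml_option` is a hypothetical helper: it replaces the first line that sets (or comments out) the key, or appends the setting if the key isn’t in the file at all. Touching only the first match matters because the stock file may carry more than one commented `#path.data:` example.

```shell
#!/bin/sh
# Hypothetical helper: set "key: value" in an elasticsearch.yml-style file.
# Replaces the first "key:" or "#key:" line; appends if none is found.
# Note: awk -v interprets backslash escapes, so avoid backslashes in values.
set_yml_option() {
  file=$1 key=$2 value=$3
  tmp=$(mktemp) || return 1
  awk -v key="$key" -v value="$value" '
    !done {
      line = $0
      sub(/^#[ \t]*/, "", line)   # ignore a leading comment marker
      if (index(line, key ":") == 1) { print key ": " value; done = 1; next }
    }
    { print }
    END { if (!done) print key ": " value }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's owner/mode
  rm -f "$tmp"
}
```

From a root shell, `set_yml_option /etc/elasticsearch/elasticsearch.yml cluster.name logcatcher`, and the same for node.name, path.data, and path.logs. Running it twice is harmless, because the second run finds the line the first one wrote.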

I’ll just link to the source for this part:

http://www.elasticsearch.org/tutorials/too-many-open-files/

[text]
sudo vi /etc/security/limits.conf

Add:
elasticsearch soft nofile 32000
elasticsearch hard nofile 32000

sudo vi /etc/pam.d/su

Uncomment the "session required pam_limits.so" line.

sudo service elasticsearch restart
[/text]
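To confirm the new limit actually applies to the running Elasticsearch process, you can read its `/proc/<pid>/limits` and compare against the 32000 set above. A minimal, Linux-only sketch; `max_open_files` is a hypothetical helper, and the PID file location in the usage line is an assumption about the Debian package, so check `/var/run` on your box.

```shell
#!/bin/sh
# Hypothetical helper: print the soft and hard "Max open files" limits
# of a running process. Linux-only; /proc/<pid>/limits is world-readable.
max_open_files() {
  awk '/^Max open files/ { print $4, $5 }' "/proc/$1/limits"
}

# Example (assumes the init script writes /var/run/elasticsearch.pid):
#   max_open_files "$(cat /var/run/elasticsearch.pid)"
```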

If you end up with garbage data in Elasticsearch, this is how to delete every index:

[text]
curl -XDELETE 'http://127.0.0.1:9200/_all'
[/text]

http://www.elasticsearch.org/guide/en/elasticsearch/reference/current/indices-delete-index.html
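Wiping `_all` is a big hammer. Logstash writes one index per day, named `logstash-YYYY.MM.dd` by default, so you can drop just the bad days instead. A small sketch assuming GNU `date` and that default index name; `logstash_index_for` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print the daily index name for a date, assuming the
# default "logstash-%{+YYYY.MM.dd}" pattern. Index dates are UTC, hence -u.
# Requires GNU date (for -d).
logstash_index_for() {
  date -u -d "$1" +'logstash-%Y.%m.%d'
}

# Example: delete only the index from 30 days ago
#   curl -XDELETE "http://127.0.0.1:9200/$(logstash_index_for '30 days ago')"
```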

INSTALL REDIS

Shamelessly stolen from http://redis.io/topics/quickstart

[text]
sudo apt-get install build-essential
wget http://download.redis.io/releases/redis-2.8.4.tar.gz
tar xzf redis-2.8.4.tar.gz
cd redis-2.8.4
make
sudo cp src/redis-server /usr/local/bin/
sudo cp src/redis-cli /usr/local/bin/
sudo mkdir /etc/redis
sudo mkdir /var/redis
sudo cp utils/redis_init_script /etc/init.d/redis_6379
sudo cp redis.conf /etc/redis/6379.conf
sudo mkdir /var/redis/6379
sudo update-rc.d redis_6379 defaults
sudo vi /etc/redis/6379.conf

Set daemonize to yes

sudo service redis_6379 start
[/text]
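The quickstart linked above asks for a few more edits to 6379.conf than just daemonize: it also has you set the pidfile to /var/run/redis_6379.pid, the port, and the working dir to /var/redis/6379. The pidfile matters because the init script looks for that file when stopping Redis. A sketch using GNU `sed -i`, assuming each of these directives appears once, uncommented, as in the stock redis.conf; `configure_redis` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: apply the Redis quickstart edits to a copied
# redis.conf for instance port $2. Only uncommented directive lines match;
# comment lines mentioning the same words are left alone. GNU sed (-i).
configure_redis() {
  conf=$1 port=$2
  sed -i \
    -e 's|^daemonize .*|daemonize yes|' \
    -e "s|^pidfile .*|pidfile /var/run/redis_${port}.pid|" \
    -e "s|^port .*|port ${port}|" \
    -e "s|^dir .*|dir /var/redis/${port}|" \
    "$conf"
}

# Example (as root): configure_redis /etc/redis/6379.conf 6379
```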

INSTALL LOGSTASH

Download Logstash and copy it to /opt/logstash/.

There’s likely a newer version available.

[text]
wget http://download.elasticsearch.org/logstash/logstash/logstash-1.3.3-flatjar.jar
sudo mkdir /opt/logstash
sudo cp logstash-1.3.3-flatjar.jar /opt/logstash/logstash.jar
sudo mkdir /etc/logstash
[/text]

If you’re running Logstash and Elasticsearch on a single computer, you might only need one Logstash instance and no Redis. I’m planning to scale this to two computers for failover.

I don’t know if you want to call the first instance a collector or shipper or what.

[text]

sudo vi /etc/logstash/logstash.conf

[/text]

logstash.conf

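[js]
input {
  tcp {
    type => "WindowsEventLog"
    port => 3515
    codec => "line"
  }
}

filter {
  if [type] == "WindowsEventLog" {
    # Each line is expected to be one JSON object (from nxlog); expand it into fields
    json {
      source => "message"
    }
    if [SourceModuleName] == "eventlog" {
      # Use the event log text as the event's message
      mutate {
        replace => [ "message", "%{Message}" ]
      }
      mutate {
        remove_field => [ "Message" ]
      }
    }
  }
}

output {
  # Queue events in Redis for the indexer instance to pick up
  redis { host => "127.0.0.1" data_type => "list" key => "logstash" }
}
[/js]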
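A quick way to test the shipper without a Windows box is to hand-feed it one line of JSON shaped like an nxlog event. A sketch: the field names are ones this config and the indexer config reference, the values are made up, and `fake_event` is a hypothetical helper. It does no JSON escaping, so keep quotes and backslashes out of the arguments.

```shell
#!/bin/sh
# Hypothetical helper: print one nxlog-style JSON event line.
# $1 = Hostname, $2 = Message. EventTime uses the "YYYY-MM-dd HH:mm:ss"
# layout the indexer's date filter expects. No JSON escaping is done.
fake_event() {
  printf '{"SourceModuleName":"eventlog","Hostname":"%s","EventTime":"%s","Message":"%s"}\n' \
    "$1" "$(date '+%Y-%m-%d %H:%M:%S')" "$2"
}
```

In bash, `fake_event testbox.example.com "pipeline test" > /dev/tcp/127.0.0.1/3515` sends it; once the rest of the pipeline below is running, it should show up in Kibana.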

I’m going to call the second instance logstash-index since it does the heavy part of the processing and shoves it into elasticsearch.

[text]

sudo vi /etc/logstash/logstash-index.conf

[/text]

logstash-index.conf

[js]
input {
  redis {
    host => "127.0.0.1"
    data_type => "list"
    key => "logstash"
    codec => json
  }
}

filter {
  # host arrives as "address:port" (which this pattern expects); keep only the address
  grok {
    match => [ "host", "^(?<host2>[0-2]?[0-9]?[0-9].[0-2]?[0-9]?[0-9].[0-2]?[0-9]?[0-9].[0-2]?[0-9]?[0-9]):.*" ]
  }
  mutate {
    replace => [ "host", "%{host2}" ]
  }
  mutate {
    remove_field => [ "host2" ]
  }

  if [type] == "WindowsEventLog" {
    mutate {
      lowercase => [ "EventType", "FileName", "Hostname", "Severity" ]
    }
    mutate {
      rename => [ "Hostname", "source_host" ]
    }
    # Strip the domain suffix from the host name
    mutate {
      gsub => [ "source_host", ".example.com", "" ]
    }
    # Use the Windows event time as the event's @timestamp
    date {
      match => [ "EventTime", "YYYY-MM-dd HH:mm:ss" ]
    }
    # Give the nxlog fields consistent eventlog_* names
    mutate {
      rename => [ "Severity", "eventlog_severity" ]
      rename => [ "SeverityValue", "eventlog_severity_code" ]
      rename => [ "Channel", "eventlog_channel" ]
      rename => [ "SourceName", "eventlog_program" ]
      rename => [ "SourceModuleName", "nxlog_input" ]
      rename => [ "Category", "eventlog_category" ]
      rename => [ "EventID", "eventlog_id" ]
      rename => [ "RecordNumber", "eventlog_record_number" ]
      rename => [ "ProcessID", "eventlog_pid" ]
    }
    # Collect the user name into AccountName (TargetUserName wins if both exist)
    if [SubjectUserName] =~ "." {
      mutate {
        replace => [ "AccountName", "%{SubjectUserName}" ]
      }
    }
    if [TargetUserName] =~ "." {
      mutate {
        replace => [ "AccountName", "%{TargetUserName}" ]
      }
    }
    if [FileName] =~ "." {
      mutate {
        replace => [ "eventlog_channel", "%{FileName}" ]
      }
    }
    mutate {
      lowercase => [ "AccountName", "eventlog_channel" ]
    }
    mutate {
      remove_field => [ "SourceModuleType", "EventTimeWritten", "EventReceivedTime", "EventType" ]
    }
  }
}

output {
  elasticsearch {
    host => "127.0.0.1"
    cluster => "logcatcher"
  }
}
[/js]
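The grok at the top of this config keeps whatever IPv4 address sits in front of the `:` in the host field, i.e. it expects host to arrive as `address:port`. Here’s the same pattern run through `sed -E`, handy for checking it against real values (`strip_port` is a hypothetical name). One difference: sed passes non-matching input through unchanged, whereas in Logstash a failed grok leaves host2 unset and the mutate writes the literal `%{host2}`, which is exactly what one commenter below ran into.

```shell
#!/bin/sh
# Hypothetical helper: same regex as the grok above (dots escaped here),
# printing the IPv4 address in front of ":port". Non-matching input is
# printed unchanged.
strip_port() {
  printf '%s\n' "$1" |
    sed -E 's/^([0-2]?[0-9]?[0-9]\.[0-2]?[0-9]?[0-9]\.[0-2]?[0-9]?[0-9]\.[0-2]?[0-9]?[0-9]):.*/\1/'
}

# Example: strip_port 192.168.1.20:49822
```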

Instead of thinking about it too much, I’ll use the init script from http://sysxfit.com/blog/2013/07/16/logging-with-logstash/

Since I’ve got 2 logstash instances, I’ll make 2 init scripts.

[text]

sudo vi /etc/init.d/logstash

[/text]

[bash]
#! /bin/sh
### BEGIN INIT INFO
# Provides: logstash
# Required-Start: $remote_fs $syslog
# Required-Stop: $remote_fs $syslog
# Default-Start: 2 3 4 5
# Default-Stop: 0 1 6
# Short-Description: Start daemon at boot time
# Description: Enable service provided by daemon.
### END INIT INFO

. /lib/lsb/init-functions

name="logstash"
# start-stop-daemon passes everything after "--" through to java
logstash_bin="/usr/bin/java -- -jar /opt/logstash/logstash.jar"
logstash_conf="/etc/logstash/logstash.conf"
logstash_log="/var/log/logstash.log"
pid_file="/var/run/$name.pid"

start () {
  command="${logstash_bin} agent -f $logstash_conf --log ${logstash_log}"
  log_daemon_msg "Starting $name" "$name"
  # -b runs it in the background, -m writes its PID to $pid_file
  if start-stop-daemon --start --quiet --oknodo --pidfile "$pid_file" -b -m --exec $command; then
    log_end_msg 0
  else
    log_end_msg 1
  fi
}

stop () {
  log_daemon_msg "Stopping $name" "$name"
  start-stop-daemon --stop --quiet --oknodo --pidfile "$pid_file"
  log_end_msg $?
}

status () {
  status_of_proc -p $pid_file "" "$name"
}

case $1 in
  start)
    if status; then exit 0; fi
    start
    ;;
  stop)
    stop
    ;;
  reload)
    stop
    start
    ;;
  restart)
    stop
    start
    ;;
  status)
    status && exit 0 || exit $?
    ;;
  *)
    echo "Usage: $0 {start|stop|restart|reload|status}"
    exit 1
    ;;
esac

exit 0
[/bash]

[text]

sudo vi /etc/init.d/logstash-index

[/text]

[bash]
#! /bin/sh
### BEGIN INIT INFO
# Provides: logstash-index
# Required-Start: $remote_fs $syslog
# Required-Stop: $remote_fs $syslog
# Default-Start: 2 3 4 5
# Default-Stop: 0 1 6
# Short-Description: Start daemon at boot time
# Description: Enable service provided by daemon.
### END INIT INFO

. /lib/lsb/init-functions

name="logstash-index"
# start-stop-daemon passes everything after "--" through to java
logstash_bin="/usr/bin/java -- -jar /opt/logstash/logstash.jar"
logstash_conf="/etc/logstash/logstash-index.conf"
logstash_log="/var/log/logstash-index.log"
pid_file="/var/run/$name.pid"

start () {
  command="${logstash_bin} agent -f $logstash_conf --log ${logstash_log}"
  log_daemon_msg "Starting $name" "$name"
  # -b runs it in the background, -m writes its PID to $pid_file
  if start-stop-daemon --start --quiet --oknodo --pidfile "$pid_file" -b -m --exec $command; then
    log_end_msg 0
  else
    log_end_msg 1
  fi
}

stop () {
  log_daemon_msg "Stopping $name" "$name"
  start-stop-daemon --stop --quiet --oknodo --pidfile "$pid_file"
  log_end_msg $?
}

status () {
  status_of_proc -p $pid_file "" "$name"
}

case $1 in
  start)
    if status; then exit 0; fi
    start
    ;;
  stop)
    stop
    ;;
  reload)
    stop
    start
    ;;
  restart)
    stop
    start
    ;;
  status)
    status && exit 0 || exit $?
    ;;
  *)
    echo "Usage: $0 {start|stop|restart|reload|status}"
    exit 1
    ;;
esac

exit 0
[/bash]
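The two scripts differ only in the Provides line, the name, and the config and log paths, so the second can be generated from the first. A sketch with `sed` (`make_index_init` is a hypothetical helper); it keeps whatever quote characters the source file uses.

```shell
#!/bin/sh
# Hypothetical helper: write a logstash-index init script ($2) derived from
# the logstash init script ($1) by rewriting the instance-specific values.
make_index_init() {
  sed -e 's/^\(# Provides: \)logstash$/\1logstash-index/' \
      -e 's/^\(name=.*\)logstash\(.*\)$/\1logstash-index\2/' \
      -e 's|/etc/logstash/logstash\.conf|/etc/logstash/logstash-index.conf|' \
      -e 's|/var/log/logstash\.log|/var/log/logstash-index.log|' \
      "$1" > "$2"
}

# Example (as root): make_index_init /etc/init.d/logstash /etc/init.d/logstash-index
```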

Now make both scripts executable, register them, and start them.

[text]
sudo chmod +x /etc/init.d/logstash
sudo chmod +x /etc/init.d/logstash-index
sudo /usr/sbin/update-rc.d -f logstash defaults
sudo /usr/sbin/update-rc.d -f logstash-index defaults
sudo service logstash start
sudo service logstash-index start
[/text]
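The flat jar can take a while to start, so `service logstash start` returning doesn’t mean port 3515 is accepting connections yet. A sketch that polls until something listens; it leans on bash’s `/dev/tcp` and coreutils `timeout`, and `wait_for_port` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: wait until host $1 accepts TCP connections on port $2,
# trying about once a second, up to $3 times (default 30).
# Uses bash's /dev/tcp pseudo-device and coreutils timeout.
wait_for_port() {
  host=$1 port=$2 tries=${3:-30}
  while [ "$tries" -gt 0 ]; do
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Example: wait_for_port 127.0.0.1 3515 60 && echo "shipper is listening"
```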

INSTALL KIBANA – OPEN CONFIGURATION

This is really only suitable for a trusted network.

For a secured setup, see http://www.ragingcomputer.com/2014/02/securing-elasticsearch-kibana-with-nginx.

If you’re not too concerned about security, just install apache2. The easiest way is to run tasksel and select “Web server”.

[text]

sudo tasksel

[/text]

Download Kibana, extract it, and copy it to /var/www/:

[text]
wget https://download.elasticsearch.org/kibana/kibana/kibana-3.0.0milestone4.tar.gz
tar xzvf kibana-3.0.0milestone4.tar.gz
sudo cp -r kibana-3.0.0milestone4/* /var/www/
[/text]

That’s it; this part is done. Next up: getting the logs into Logstash.

See http://www.ragingcomputer.com/2014/02/sending-windows-event-logs-to-logstash-elasticsearch-kibana-with-nxlog

Hey man, really enjoying your setup. Elasticsearch provides official Debian repositories with both Elasticsearch and Logstash. You might want to use those to help you maintain the setup as new releases roll out, instead of managing them manually. http://www.elasticsearch.org/blog/apt-and-yum-repositories/


Great write-up, very thorough for a general purpose build. Works great with the versions specified, thanks!

There are a few changes that break this setup on the latest release of Logstash, however. You’ll need the latest version of Elasticsearch, which Logstash now checks for since ES owns Logstash and Kibana. It should be extracted from the available tar, then preferably run as a service using the ES wrapper. The only other change is in your Logstash init script: for version 1.4.0, the $command variable should read:

command="${logstash_bin} -- agent -f $logstash_conf -l ${logstash_log}"

This takes care of any start-stop-daemon issues, and actually runs the engine with an agent.


Hi

Thanks for your very useful article!

Just a question (for now!): could you please clarify the purpose/added value of using Redis between the LOGSTASH-INDEXER and ELASTICSEARCH?

Is it because these two logical components are deployed on two separate servers?


Can you share the Kibana dashboard configuration that you’re using for the photo posted above? That would be great!


Hello,

I copied your input and nxlog.conf to Windows, but I receive this in the log:

message:

\u0016\u0003\u0001\u0000[\u0001\u0000\u0000W\u0003\u0001U\xDB\\ \xDD\xD30\u0005\u0019])\u0014\u001F\xA5\xAF\xF7\xC4\xE7\xB4\xD8Wk6Y\x97\xF3_mINh\xE6\u0000\u0000*\u00009\u00008\u00005\u0000\u0016\u0000\u0013\u0000

@version:

1

@timestamp:

August 24th 2015, 15:02:06.521

host:

%{host2}

type:

WindowsEventLog

tags:

[“_grokparsefailure”,”_jsonparsefailure”]

_source:

{“message”:”\\u0016\\u0003\\u0001\\u0000[\\u0001\\u0000\\u0000W\\u0003\\u0001U\\xDB\\\\ \\xDD\\xD30\\u0005\\u0019])\\u0014\\u001F\\xA5\\xAF\\xF7\\xC4\\xE7\\xB4

The only difference is that I output with om_ssl:

Module om_ssl

Host logserver

Port 3515

CAFile %CERTDIR%\logstash-forwarder.crt


Hi. Thanks for the great write up.

I appear to be hitting the issue you had where Logstash puts the entire JSON event from nxlog into the message field.

I can’t seem to get around the issue. What fixed it for you in the end?

Thanks for your assistance.


Can I use (install) Logstash / Elasticsearch / Kibana in Windows 8 or Windows Server 2008-2012 R2 ?
